Research

Current research interests include:

- Statistical learning theory for multimodal learning

- Multimodal learning with unpaired modalities

- Multimodal linguistic unit discovery

- Generative models

* denotes equal contribution.

|

|

|

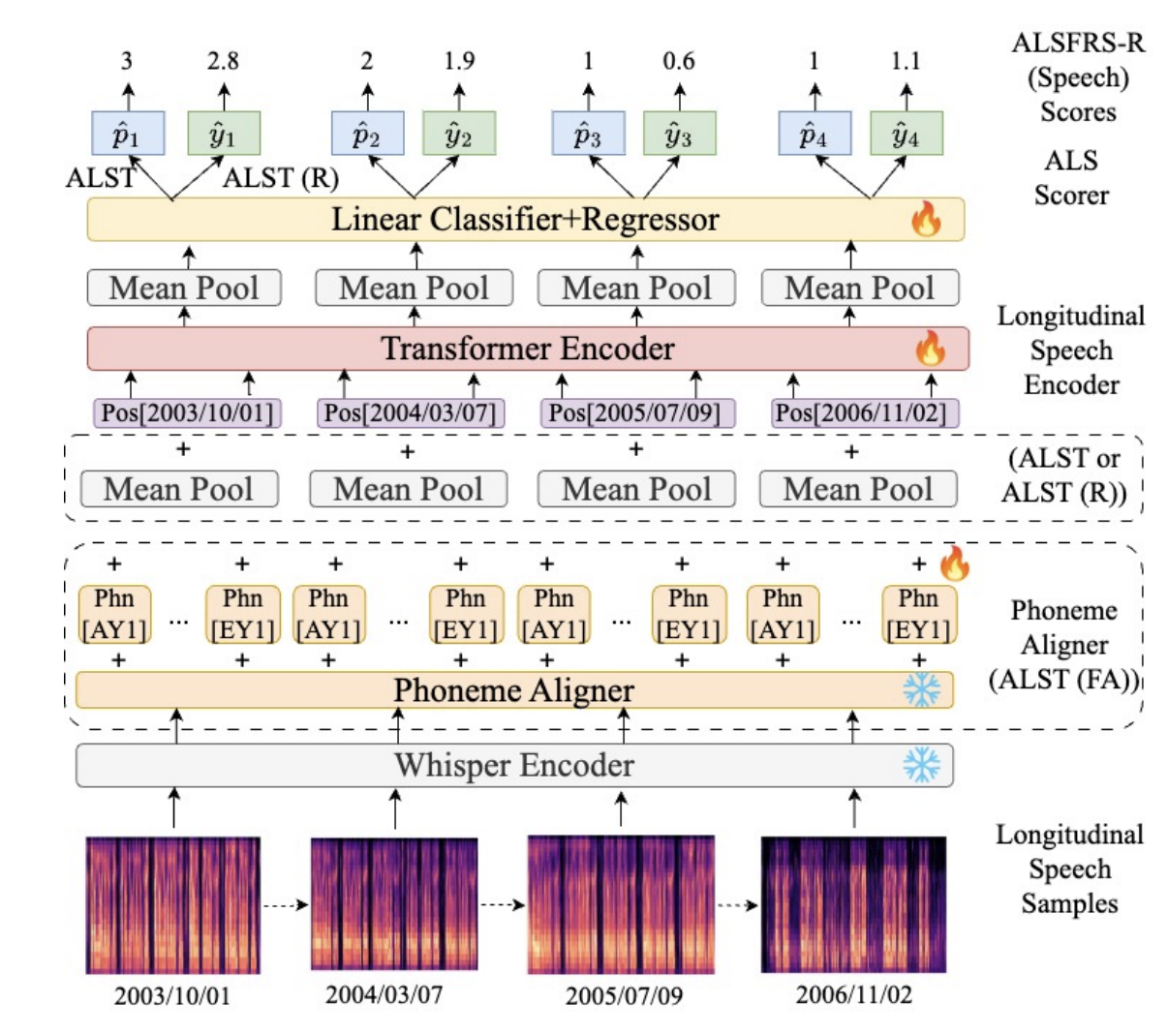

Automatic Prediction of Amyotrophic Lateral Sclerosis Progression using Longitudinal Speech Transformer

Liming Wang,

Yuan Gong,

Nauman Dawalatabad,

Marco Vilela,

Katerina Placek,

Brian Tracey,

Yishu Gong,

Fernando Vieira,

James Glass

Interspeech, 2024

project page

/

arXiv

|

|

|

Towards Unsupervised Speech Recognition without Pronunciation Models

Junrui Ni,

Liming Wang,

Yang Zhang,

Kaizhi Qian,

Heting Gao,

Mark Hasegawa-Johnson,

Chang D. Yoo

In submission, 2024

project page

/

arXiv

|

|

|

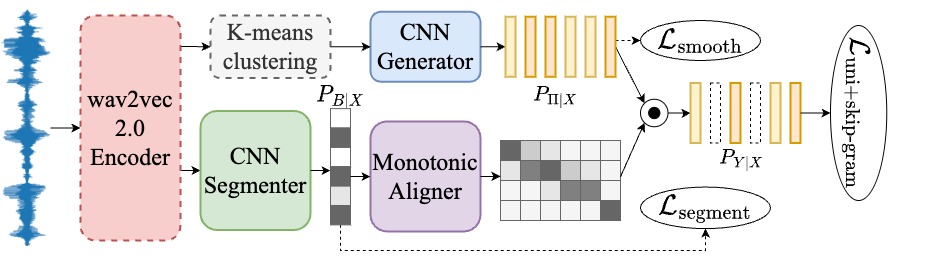

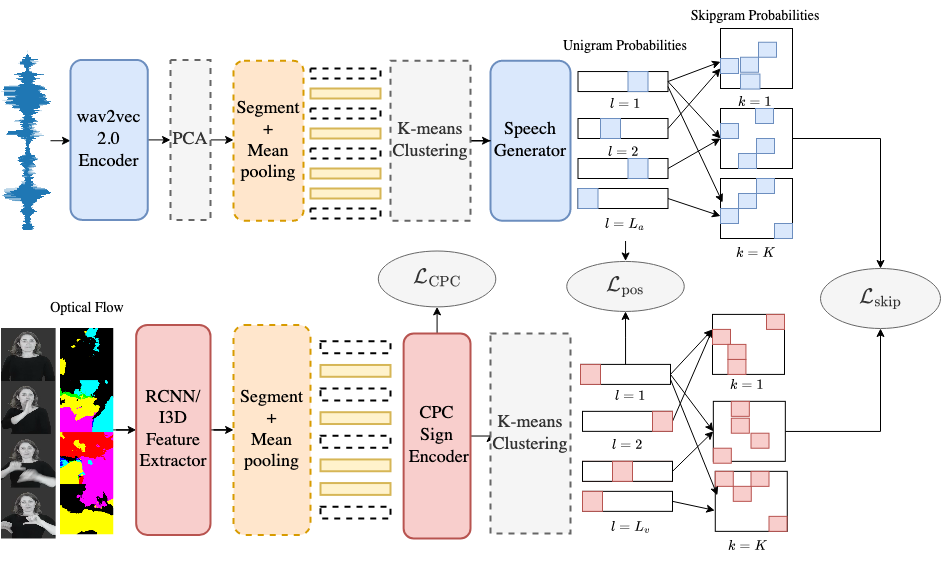

Unsupervised Speech Recognition with N-skipgram and Positional Unigram Matching

Liming Wang,

Mark Hasegawa-Johnson,

Chang D. Yoo

International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2024

project page

/

arXiv

|

|

|

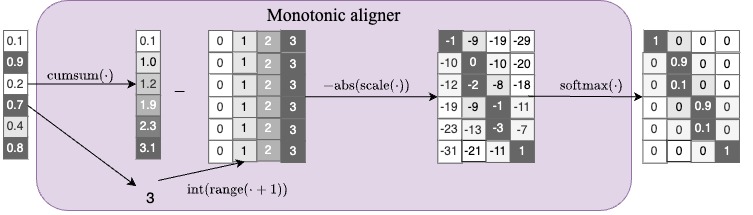

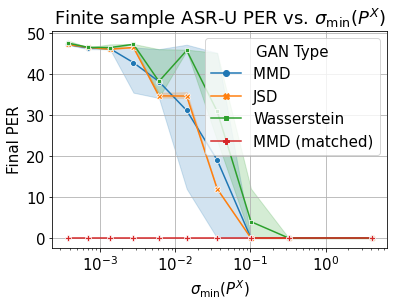

A Theory of Unsupervised Speech Recognition

Liming Wang,

Mark Hasegawa-Johnson,

Chang D. Yoo

ACL, 2023

project page

/

arXiv

|

|

|

Listen, Decipher and Sign: Toward Unsupervised Speech-to-Sign Language Recognition

Liming Wang,

Junrui Ni,

Heting Gao,

Jialu Li,

Kai Chieh Chang,

Xulin Fan,

Junkai Wu,

Mark Hasegawa-Johnson,

Chang D. Yoo

ACL (Findings), 2023

project page

/

arXiv

|

|

|

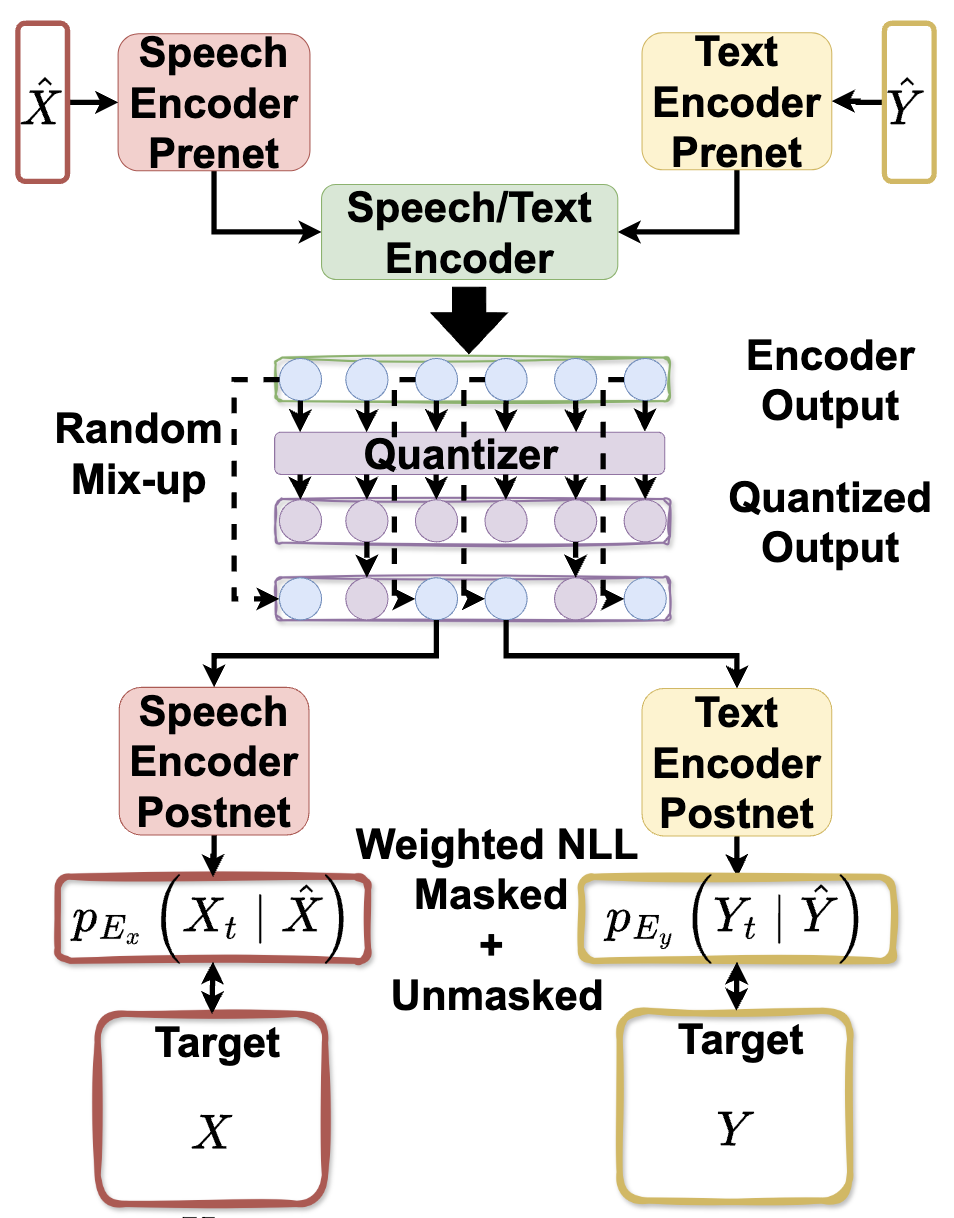

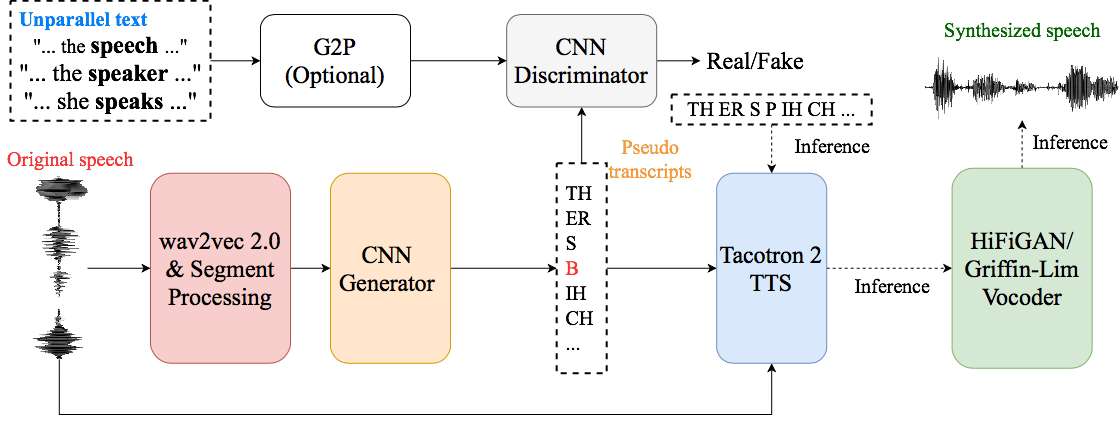

Unsupervised Text-to-Speech Synthesis by Unsupervised Automatic Speech Recognition

Junrui Ni*,

Liming Wang*,

Heting Gao*,

Kaizhi Qian,

Yang Zhang,

Shiyu Chang,

Mark Hasegawa-Johnson

Interspeech, 2022

project page

/

arXiv

|

|

|

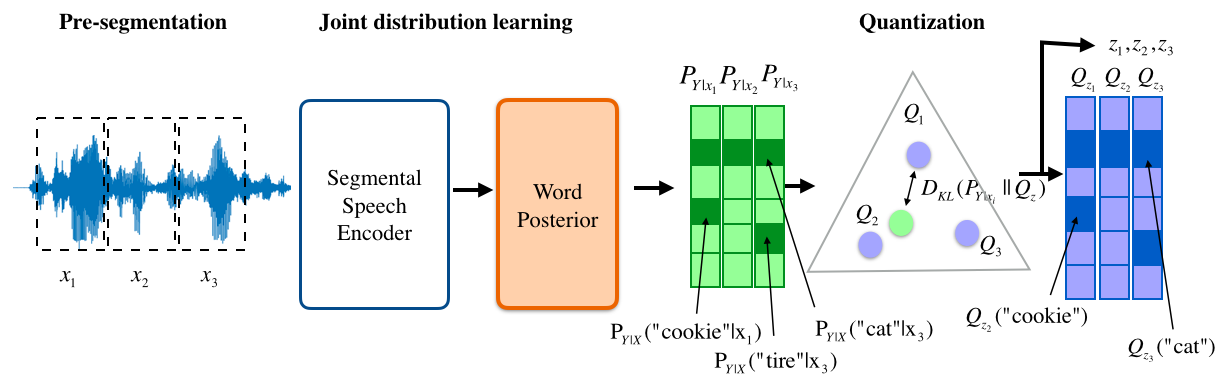

Self-supervised Semantic-driven Phoneme Discovery for Zero-resource Speech Recognition

Liming Wang,

Siyuan Feng,

Mark Hasegawa-Johnson,

Chang D. Yoo

ACL, 2022

project page

/

arXiv

|

|

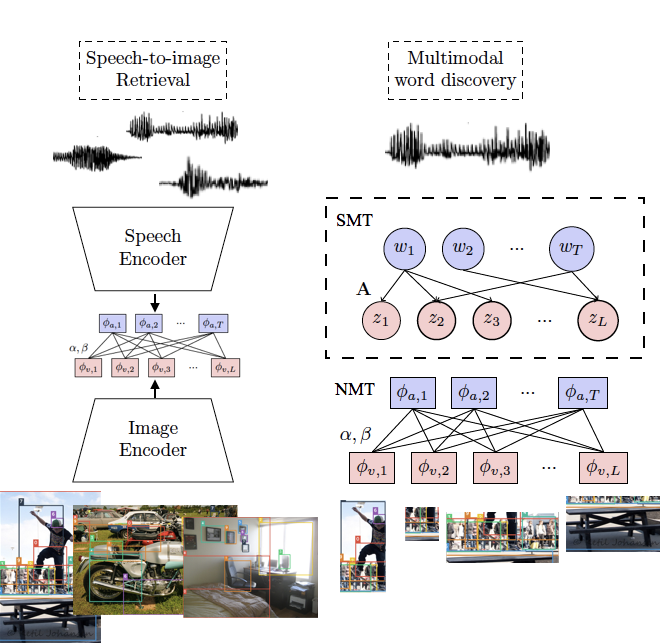

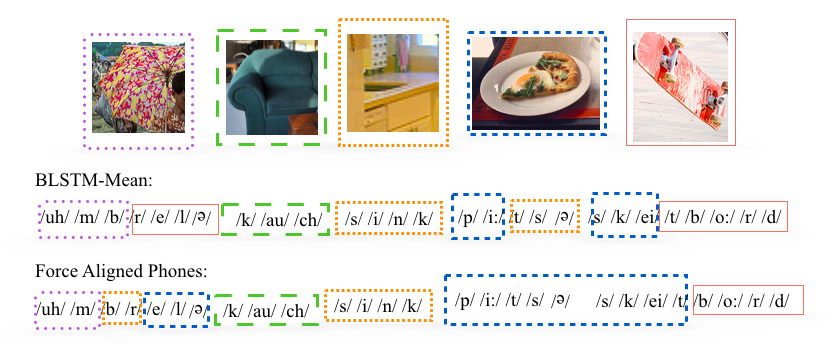

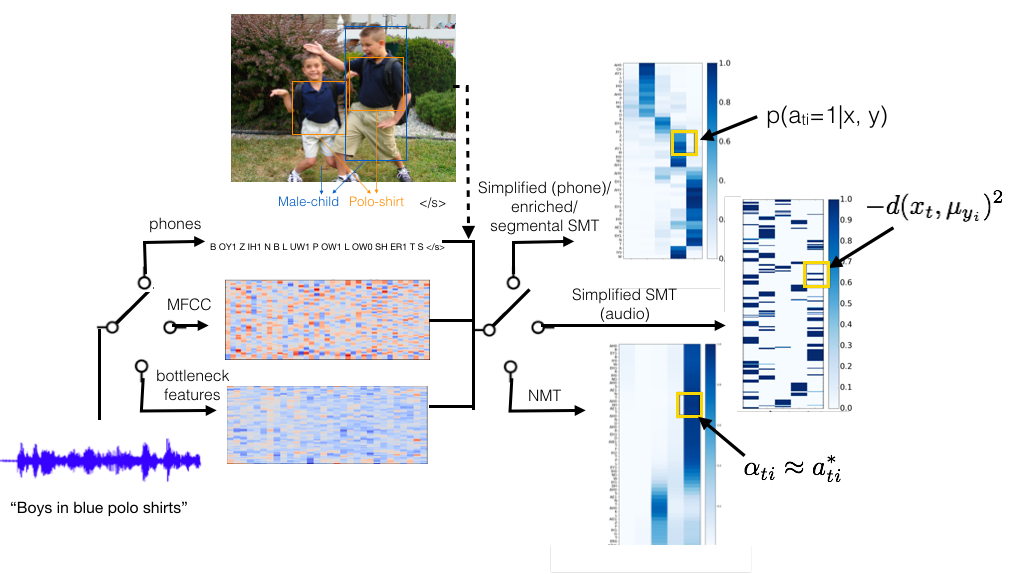

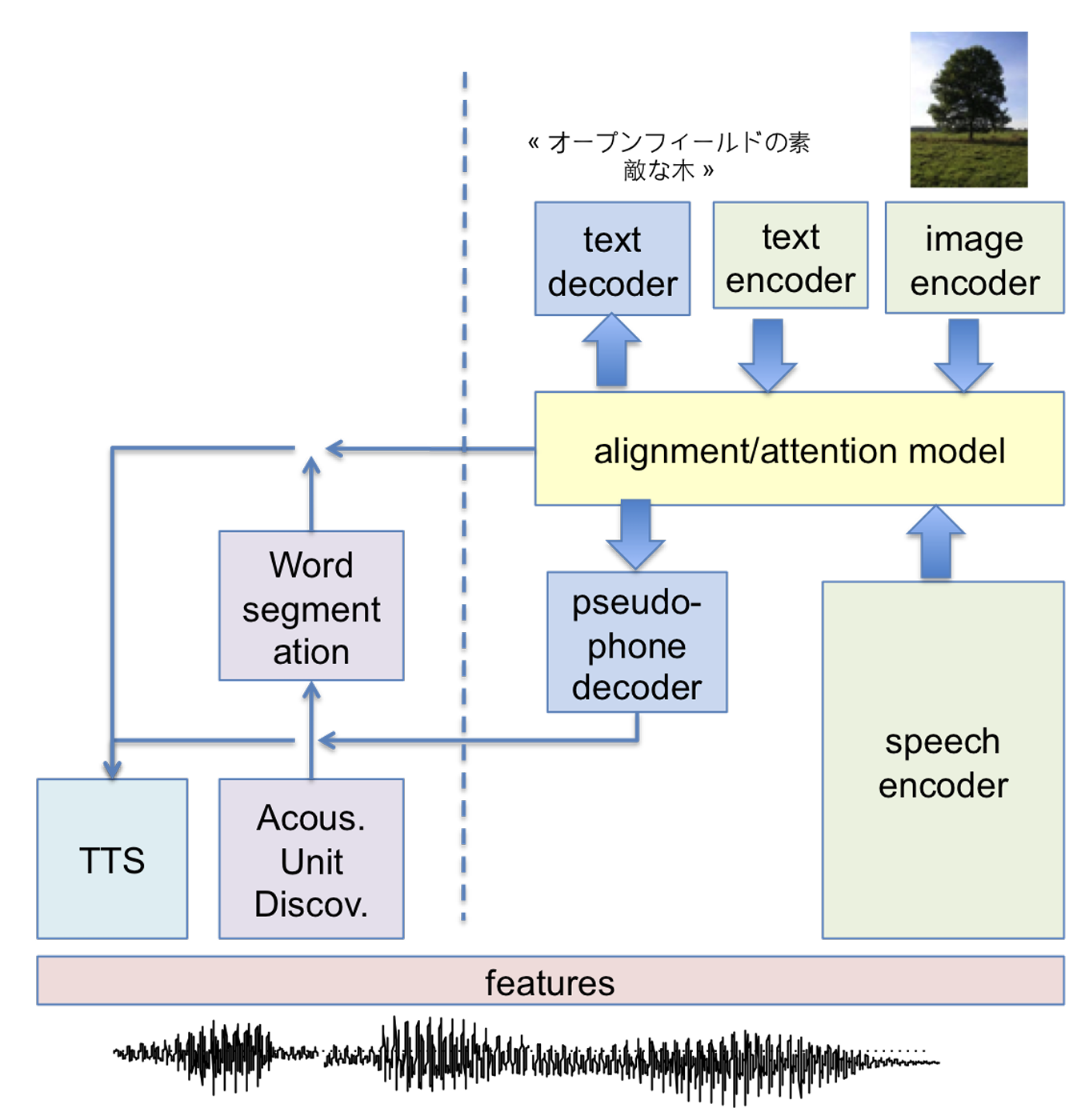

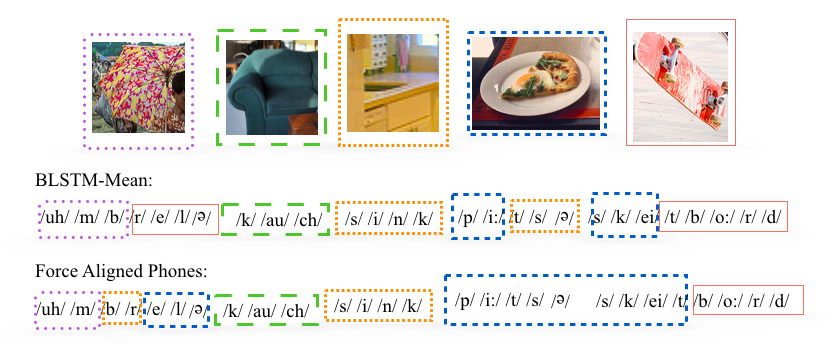

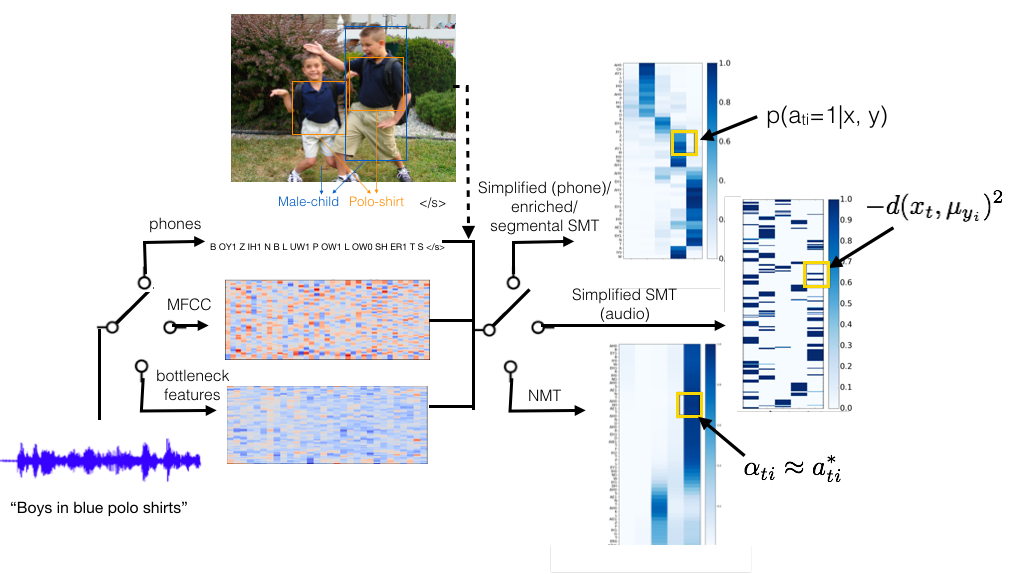

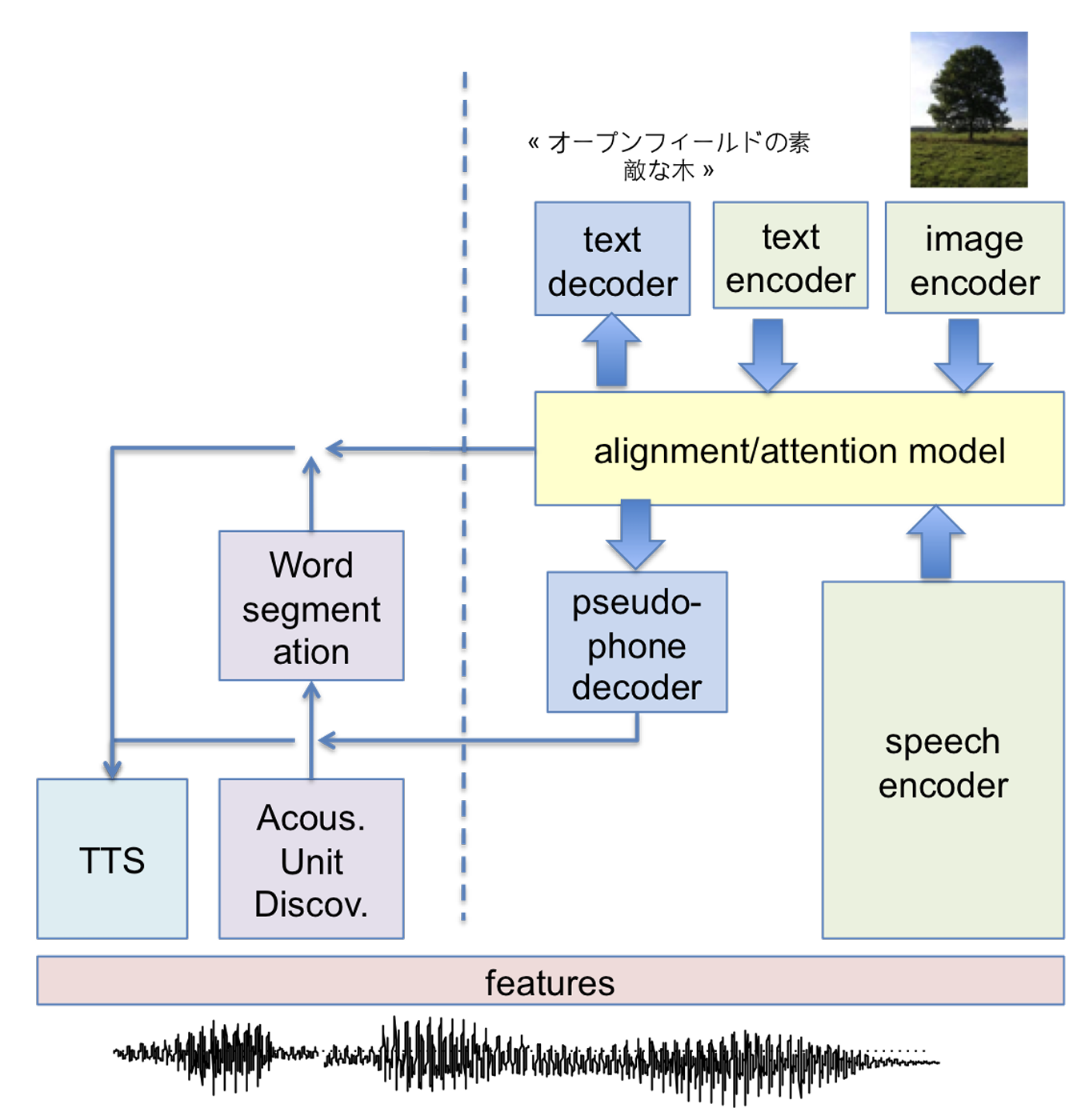

A Translation Framework for Multimodal Spoken Unit Discovery

Liming Wang,

Mark Hasegawa-Johnson

Asilomar Conference on Signals, Systems, and Computers, 2021

|

|

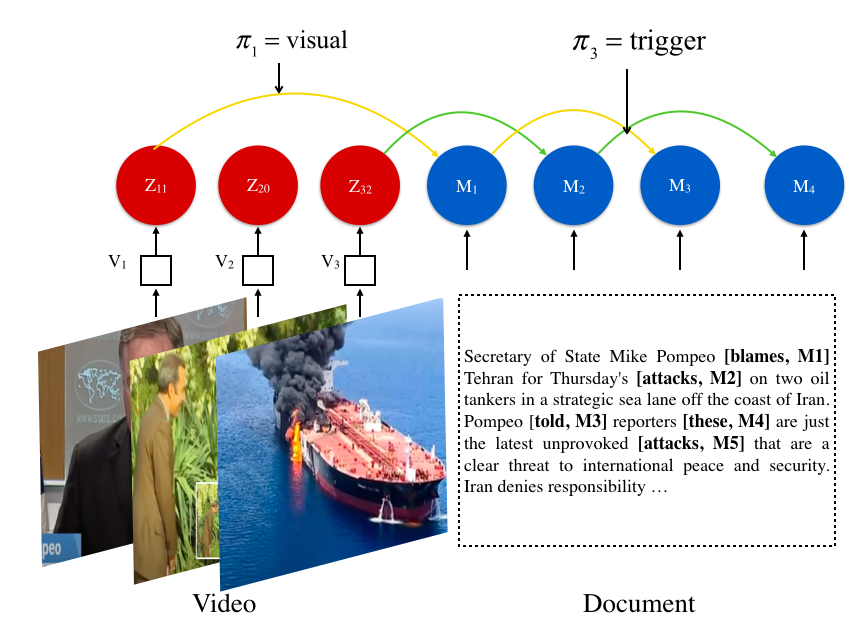

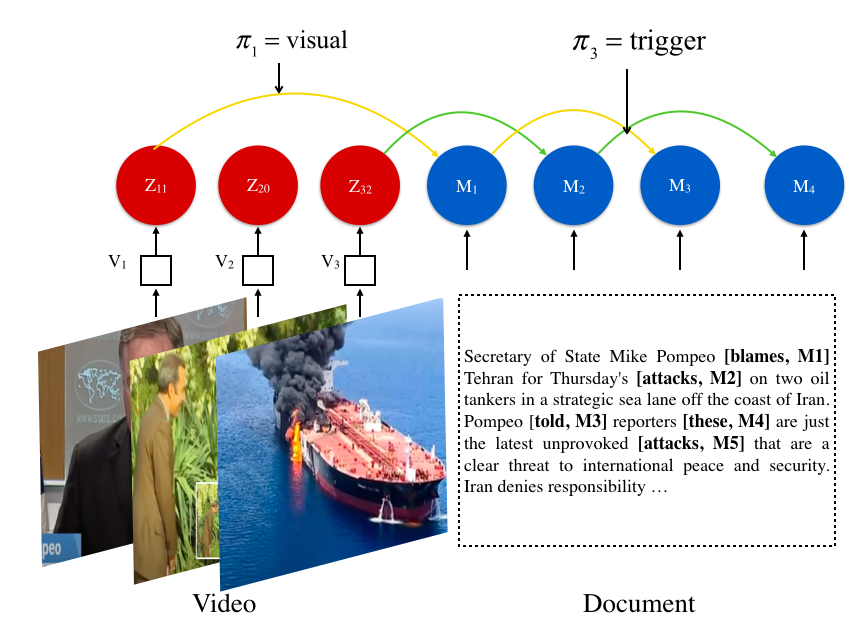

Coreference by Appearance: Visually Grounded Event Coreference Resolution

Liming Wang,

Shengyu Feng,

Xudong Lin,

Manling Li,

Heng Ji,

Shih-Fu Chang

The Fourth Workshop on Computational Models of Reference, Anaphora and Coreference (CRAC), 2021

|

|

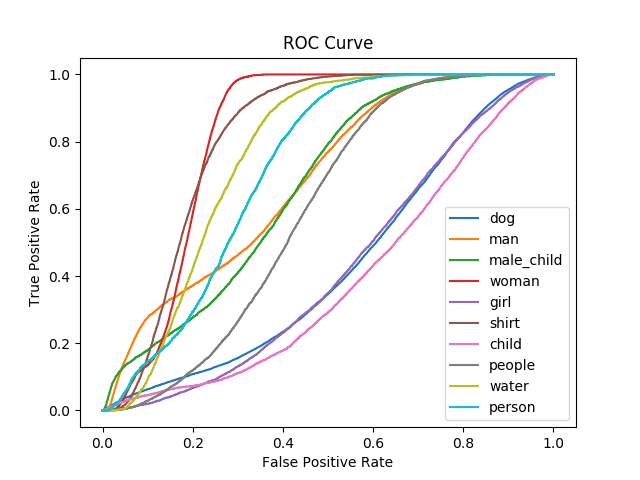

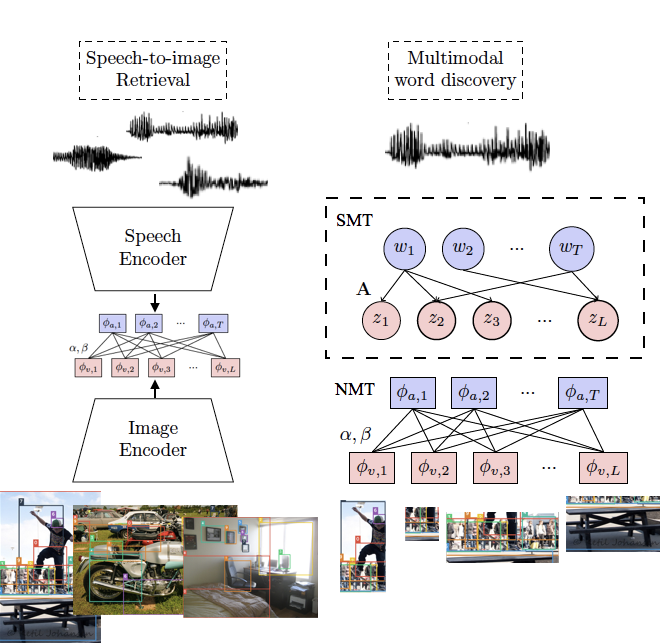

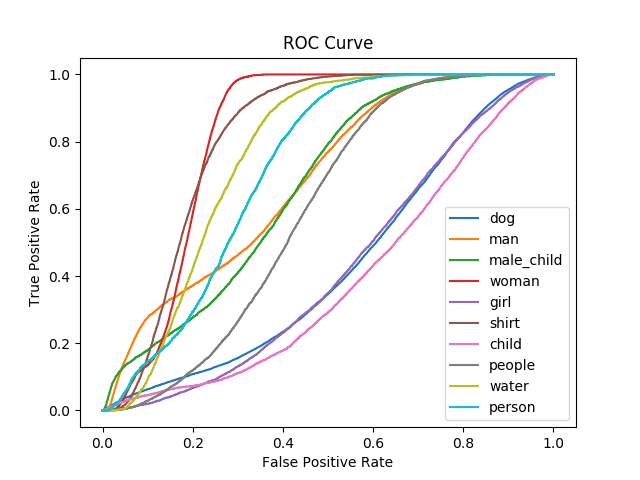

Align or Attend? Toward More Efficient and Accurate Spoken Word Discovery Using Speech-to-Image Retrieval

Liming Wang,

Xinsheng Wang,

Mark Hasegawa-Johnson,

Odette Scharenborg,

Najim Dehak

ICASSP, 2021

project page

/

arXiv

|

|

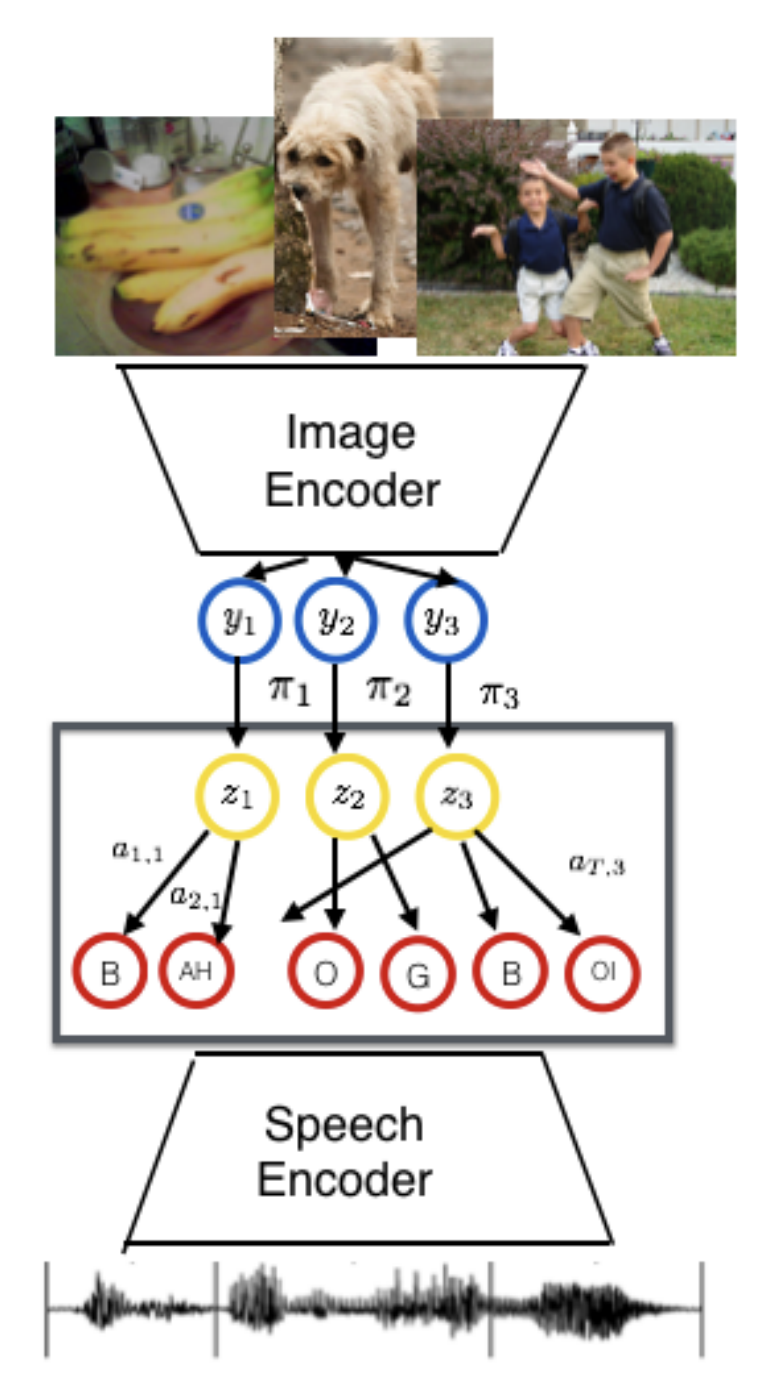

A DNN-HMM-DNN Hybrid Model for Discovering Word-like Units from Spoken Captions and Image Regions

Liming Wang,

Mark Hasegawa-Johnson

Interspeech, 2020

project page

/

arXiv

|

|

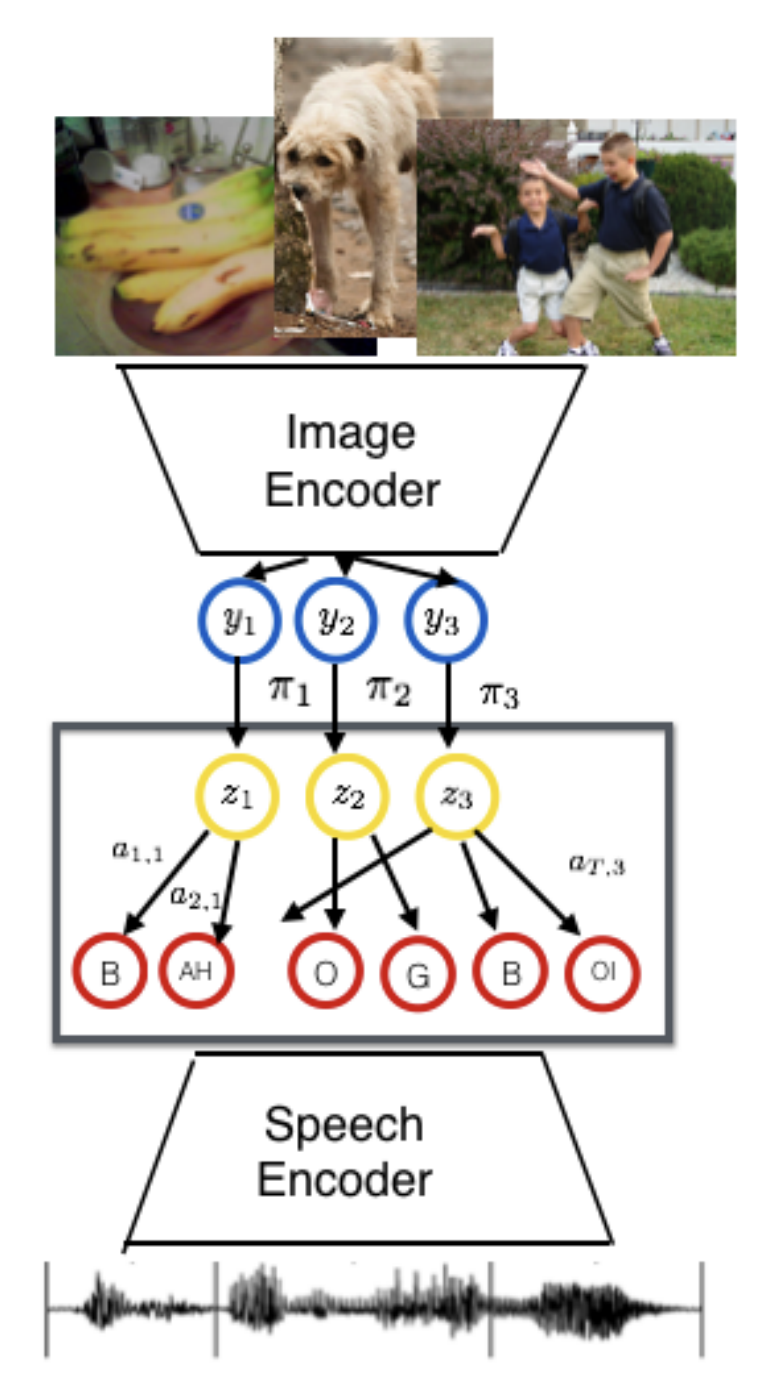

Multimodal Word Discovery with Spoken Descriptions and Visual Concepts

Liming Wang,

Mark Hasegawa-Johnson

TASLP, 2020

project page

/

arXiv

|

|

Multimodal Word Discovery with Phone Sequence and Visual Concepts

Liming Wang,

Mark Hasegawa-Johnson

Interspeech, 2019

project page

/

arXiv

|

|

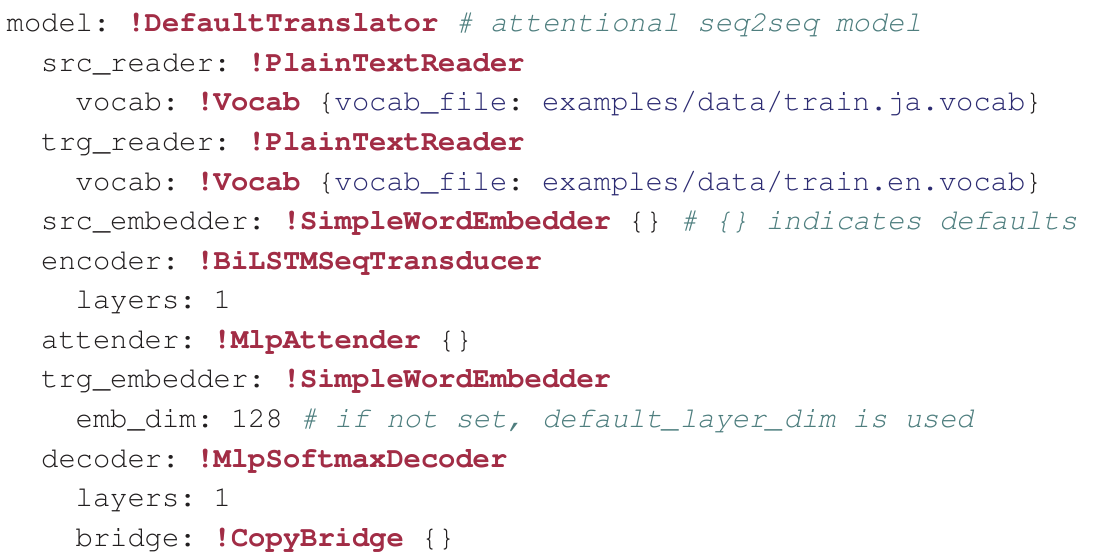

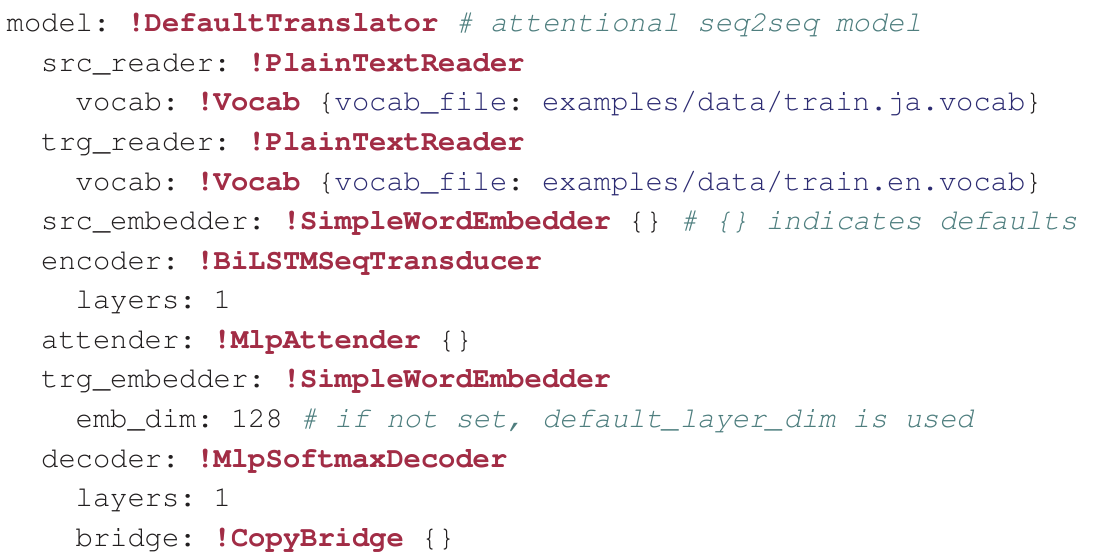

XNMT: The eXtensible Neural Machine Translation Toolkit

Graham Neubig,

Matthias Sperber,

Xinyi Wang,

Matthieu Felix,

Austin Matthews,

Sarguna Padmanabhan,

Ye Qi,

Devendra Sachan,

Philip Arthur,

Pierre Godard,

John Hewitt,

Rachid Riad,

Liming Wang

AMTA, 2018

project page

/

arXiv

|

|

Linguistic Unit Discovery from Multimodal Inputs in Unwritten Languages: Summary of the "Speaking Rosetta" JSALT 2017 Workshop

Odette Scharenborg,

Laurent Besacier,

Alan Black,

Mark Hasegawa-Johnson,

Florian Metze,

Graham Neubig,

Sebastian Stüker,

Pierre Godard,

Markus Müller,

Lucas Ondel,

Shruti Palaskar,

Philip Arthur,

Francesco Ciannella,

Mingxing Du,

Elin Larsen,

Danny Merkx,

Rachid Riad,

Liming Wang,

Emmanuel Dupoux

ICASSP, 2018

project page

/

arXiv

|

|